The Linguabase is the underlying data that powers In Other Words and OtherWordly. It maps how English words connect through weighted relationships: not just synonyms, but categorical relationships (apple → fruit), functional connections (apple → orchard), and cultural associations (apple → pie).

The Small World of Language

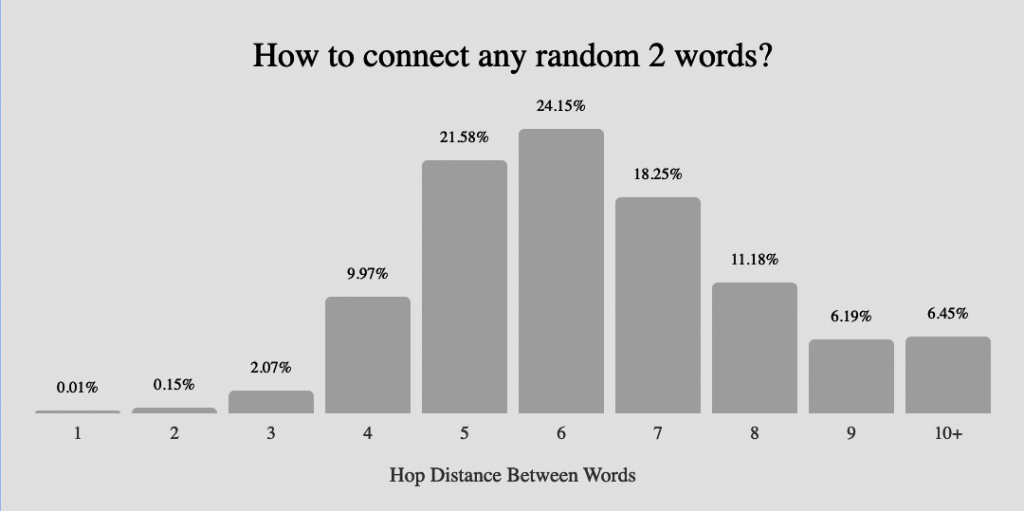

Our most significant discovery: 76% of random English word pairs connect in seven hops or fewer, with an average path length of just 6.43 steps. This “small world” property explains why players can intuitively navigate from “Batman” to “inspect” through a chain of meaningful links: Batman → vigilante → watchful → circumspect → inspect. Nearly any two words connect through chains of meaning, a structural property of the network that makes our games possible.
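Path-length figures like these come from shortest-path searches over the word graph. The sketch below is minimal and assumes an unweighted, undirected graph searched with breadth-first search (BFS). The document does not describe the exact method, and the real edges are weighted. The toy `GRAPH` and the helper names `bfs_distances`, `shortest_path`, and `small_world_stats` are hypothetical.

```python
from collections import deque
from itertools import combinations

# Hypothetical toy graph (undirected adjacency lists), not Linguabase data.
GRAPH: dict[str, list[str]] = {
    "Batman": ["vigilante", "bat"],
    "vigilante": ["Batman", "watchful"],
    "watchful": ["vigilante", "circumspect"],
    "circumspect": ["watchful", "inspect"],
    "inspect": ["circumspect"],
    "bat": ["Batman", "cave"],
    "cave": ["bat"],
}


def bfs_distances(graph: dict[str, list[str]], source: str) -> dict[str, int]:
    """Hop count from source to every reachable word (unweighted BFS)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        word = queue.popleft()
        for nxt in graph.get(word, []):
            if nxt not in dist:
                dist[nxt] = dist[word] + 1
                queue.append(nxt)
    return dist


def shortest_path(graph: dict[str, list[str]], source: str, target: str):
    """One shortest chain of words from source to target, or None if unreachable."""
    parent = {source: None}
    queue = deque([source])
    while queue:
        word = queue.popleft()
        if word == target:
            # Walk parent links back to the source, then reverse.
            path = []
            while word is not None:
                path.append(word)
                word = parent[word]
            return path[::-1]
        for nxt in graph.get(word, []):
            if nxt not in parent:
                parent[nxt] = word
                queue.append(nxt)
    return None


def small_world_stats(graph: dict[str, list[str]], max_hops: int = 7):
    """Share of all word pairs within max_hops, and mean hops over connected pairs."""
    dists = {w: bfs_distances(graph, w) for w in graph}
    pairs = list(combinations(sorted(graph), 2))
    lengths = [dists[a][b] for a, b in pairs if b in dists[a]]
    within = sum(1 for d in lengths if d <= max_hops)
    mean_hops = sum(lengths) / len(lengths) if lengths else float("nan")
    return within / len(pairs), mean_hops


if __name__ == "__main__":
    print(shortest_path(GRAPH, "Batman", "inspect"))
    # ['Batman', 'vigilante', 'watchful', 'circumspect', 'inspect']
    print(small_world_stats(GRAPH))
```

Searching every pair is impractical on a network of 1.5 million words. A statistic over "random word pairs" would more plausibly come from running BFS on a random sample of pairs. `small_world_stats` could be adapted to this by feeding it sampled pairs instead of `combinations`.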

Research Journey

2013–2014: Early computational linguistics work on NSF-funded supercomputing resources, applying LDA (latent Dirichlet allocation) topic modeling to massive text corpora.

2017: Began systematically building a database of word associations, combining algorithmic extraction with human curation by our lexicographic team.

2023: GPT-4 arrived—both a threat and an opportunity. It threatened the original visual thesaurus business model (why pay for word relationships when AI generates them on demand?). But it also enabled rapid validation and expansion. We used LLMs not to generate associations from scratch, but to evaluate and rank relationships, resolve polysemy, and audit for false connections—80 million API calls focused on recognition rather than generation.

Result: A semantic network of 1.5 million words and approximately 100 million weighted cross-links. The top 400,000 terms power our apps.

A Hybrid Approach

The Linguabase combines three sources of human knowledge with LLM-assisted processing:

- In-house lexicographic work — 55,000 human-curated word lists covering 5,000+ topics, created by our team of freelance linguists and graduate students. This work discovered 394,000 relationships that LLM-generated data missed.

- Library of Congress classifications — 648,460 classifications from 17 million books since 1897. “Coffee” appears in 2,542 classifications, revealing connections to fair-trade economics and agricultural policy—not just beverages.

- 70+ existing references — Wiktionary, WordNet, NASA thesaurus, National Library of Medicine’s UMLS, Getty Art & Architecture, and specialized domain resources.

The key insight: when building large, ranked datasets of word relationships, LLMs are more useful as evaluators than as generators. We use them to validate relationships, remove false connections (291,000 removed through LLM auditing), and expand coverage. We do not use them to generate associations from scratch, which produces generic, predictable results.
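The evaluate-rather-than-generate approach can be sketched as a filter. Candidate links come from curated sources, and the model is only asked to score them. This minimal sketch assumes a judge that returns a plausibility score between 0 and 1, with a cut-off of 0.5. `Judge`, `audit_edges`, `stub_judge`, and the scores are all hypothetical. The stub stands in for whatever model call and prompt are actually used.

```python
from typing import Callable

# Hypothetical interface: maps (word, candidate associate) to a plausibility
# score in [0, 1]. A real judge might wrap an LLM prompt; here it is a function.
Judge = Callable[[str, str], float]


def audit_edges(candidates: list[tuple[str, str]], judge: Judge, threshold: float = 0.5):
    """Score every candidate link, drop those under threshold, rank the rest."""
    kept: list[tuple[str, str, float]] = []
    removed: list[tuple[str, str]] = []
    for word, assoc in candidates:
        s = judge(word, assoc)
        if s >= threshold:
            kept.append((word, assoc, s))
        else:
            removed.append((word, assoc))
    # Highest-scoring links first.
    kept.sort(key=lambda edge: edge[2], reverse=True)
    return kept, removed


# Made-up scores standing in for model judgments (hypothetical).
STUB_SCORES = {
    ("apple", "fruit"): 0.95,
    ("apple", "orchard"): 0.8,
    ("apple", "pie"): 0.7,
    ("apple", "carburetor"): 0.02,
}


def stub_judge(word: str, assoc: str) -> float:
    return STUB_SCORES.get((word, assoc), 0.0)


if __name__ == "__main__":
    kept, removed = audit_edges(list(STUB_SCORES), stub_judge)
    print([assoc for _, assoc, _ in kept])  # ['fruit', 'orchard', 'pie']
    print(removed)  # [('apple', 'carburetor')]
```

In this setup the model only accepts, rejects, or reorders links it is shown. It never proposes new ones, so it cannot introduce the generic associations that open-ended generation tends to produce.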

Each term connects to an average of 40 associations. A multi-signal system ranks these and trims them to 17 playable choices, combining frequency weighting, co-occurrence patterns, manual curation scores, and cross-source validation.
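A multi-signal ranking like this is often a weighted sum of normalized signals followed by a top-k cut. This minimal sketch works under that assumption. The four fields mirror the signals named above. The weights, the [0, 1] normalization, the source-count cap, and the names `Candidate`, `score`, and `playable_choices` are hypothetical, not the Linguabase's actual formula.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    term: str
    frequency: float     # corpus frequency weight, assumed normalized to [0, 1]
    cooccurrence: float  # co-occurrence strength, assumed normalized to [0, 1]
    curation: float      # manual curation score, assumed normalized to [0, 1]
    sources: int         # number of independent sources listing this link


# Hypothetical weights (they sum to 1.0); the real weighting is not published here.
WEIGHTS = {"frequency": 0.2, "cooccurrence": 0.3, "curation": 0.35, "sources": 0.15}
MAX_SOURCES = 5  # hypothetical cap used to normalize the source count


def score(c: Candidate) -> float:
    """Weighted sum of the four signals, each on a 0-to-1 scale."""
    source_signal = min(c.sources, MAX_SOURCES) / MAX_SOURCES
    return (WEIGHTS["frequency"] * c.frequency
            + WEIGHTS["cooccurrence"] * c.cooccurrence
            + WEIGHTS["curation"] * c.curation
            + WEIGHTS["sources"] * source_signal)


def playable_choices(candidates: list[Candidate], k: int = 17) -> list[Candidate]:
    """The k highest-scoring associations, best first."""
    return heapq.nlargest(k, candidates, key=score)


if __name__ == "__main__":
    # 40 synthetic candidates whose signals rise with i, so the top 17 are i = 39..23.
    cands = [Candidate(f"assoc{i}", i / 39, i / 39, i / 39, 5) for i in range(40)]
    print([c.term for c in playable_choices(cands)])
```

`heapq.nlargest` returns the k best items in descending order. With only about 40 candidates per term, `sorted(candidates, key=score, reverse=True)[:k]` would work equally well.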

Multiple Meanings as Network Bridges

English words often carry multiple meanings, creating natural bridges in our network:

- Homographs — Identical spelling, different meanings: “bass” (sound) vs. “bass” (fish). English has 1,000–3,000 of these.

- Polysemes — Multiple related definitions from the same root: “head” as body part, leader, or ship’s bow.

- Contextual flavors — Same core meaning, different associations: “hiking” can emphasize nature (scenery, trails) or exercise (fitness, exertion).

These multi-sense words act as bridges, not shortcuts: they offer creative routing options for navigating semantic space. An analysis of 31,387 homograph-containing paths showed no efficiency advantage over standard paths.
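A comparison like the homograph-path analysis can be sketched in two steps. First, find a shortest path for each sampled word pair. Then group the path lengths by whether a homograph sits in the middle of the chain. This minimal sketch assumes unweighted BFS paths and counts a path as homograph-containing only when a homograph is an intermediate node. The toy graph, pairs, and helper names are hypothetical, and the toy output says nothing about the real 31,387-path result.

```python
from collections import deque
from statistics import mean

# Hypothetical toy graph: "bass" bridges a music cluster and a fishing cluster.
TOY_GRAPH: dict[str, list[str]] = {
    "strings": ["guitar"],
    "guitar": ["strings", "bass"],
    "bass": ["guitar", "trout"],
    "trout": ["bass", "river"],
    "river": ["trout", "boat"],
    "boat": ["river"],
}
HOMOGRAPHS = {"bass"}
SAMPLE_PAIRS = [("strings", "trout"), ("guitar", "river"), ("trout", "boat"), ("strings", "guitar")]


def shortest_path(graph: dict[str, list[str]], source: str, target: str):
    """BFS shortest path as a list of words, or None if unreachable."""
    parent = {source: None}
    queue = deque([source])
    while queue:
        word = queue.popleft()
        if word == target:
            path = []
            while word is not None:
                path.append(word)
                word = parent[word]
            return path[::-1]
        for nxt in graph.get(word, []):
            if nxt not in parent:
                parent[nxt] = word
                queue.append(nxt)
    return None


def compare_paths(graph, pairs, homographs):
    """Mean hop count for paths routed through a homograph vs. all other paths."""
    via_homograph, other = [], []
    for a, b in pairs:
        path = shortest_path(graph, a, b)
        if path is None:
            continue  # skip unreachable pairs
        hops = len(path) - 1
        # Only intermediate words count as bridges; endpoints are excluded.
        bucket = via_homograph if any(w in homographs for w in path[1:-1]) else other
        bucket.append(hops)
    return (mean(via_homograph) if via_homograph else None,
            mean(other) if other else None)


if __name__ == "__main__":
    print(compare_paths(TOY_GRAPH, SAMPLE_PAIRS, HOMOGRAPHS))
```

When several shortest paths tie, BFS returns whichever one it reaches first. A real analysis would therefore need an explicit tie rule, for example checking whether any equally short path avoids homographs.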

Scale in Context

Webster’s Third New International Dictionary (1961) required 757 editor-years and $3.5 million (over $50 million in today’s dollars) to compile 476,000 entries. The first edition of the Oxford English Dictionary took about 70 years and thousands of contributors.

What previous generations of lexicographers built over centuries, we achieved through a combination of human expertise, systematic data aggregation, and AI-assisted expansion. But more than scale, the Linguabase represents a new way of thinking about language—not as a list of definitions, but as a navigable network of meaning.

The Team

Michael Douma, Greg Ligierko, Li Mei, and Orin Hargraves

Funding

- NSF XSEDE Supercomputer Grant (#IRI130011, 2013–2014) — 296,000 compute hours for foundational NLP processing

- AWS Startups (2024) — $1,000 cloud credits

- Microsoft for Nonprofits (2023–2025) — $380,000 in Azure credits for LLM token processing